Every CEO has the same mandate right now: ship AI features faster. Improve efficiency. Cut costs. Use Claude, use GPT, use whatever works, just make it happen.

And AI teams are delivering. They’re building agents that automate customer support, embedding LLMs into products, and deploying AI workflows that make real decisions in production. For the first time, engineering velocity isn’t the bottleneck.

Security is.

Here’s the uncomfortable truth: security teams and AI teams don’t speak the same language.

I’ve lived this firsthand. As an AI engineer at Meta, I dreaded security and privacy reviews before prod releases. They felt like speed bumps when I just wanted to ship. But Meta had one thing right: they monitored everything in production without getting in the way.

That is the model that should exist everywhere.

For AI engineers, the metrics that matter are accuracy and latency. Did the model return the right answer? Did it do it in under 200ms? Everything else, like compliance reviews, security scans, and manual audits, feels like friction. A blocker to shipping. A checkbox that does not help them get promoted.

For security teams, AI is an uncontrollable black box. They see API keys hardcoded in repos (sometimes personal GPT or Claude API keys), PII flowing to third-party LLMs, and agents calling endpoints they have never heard of. They know the risk is real. But they do not have visibility. They do not have control. And they lack the trust of AI engineers who see them as the “department of no.”

The result? Shadow AI.

According to reports, up to 80% of AI usage in enterprises is unsanctioned. Developers are spinning up GPT integrations, calling Anthropic APIs, and building agentic workflows without security ever knowing they exist. In 67% of organizations, AI engineers are downloading models and components from Hugging Face. It is not malicious. It is just faster. And in a world where every competitor is racing to ship AI, faster wins.

But faster without visibility is a ticking time bomb.

Traditional security tools were not built to understand AI at runtime.

Gateway-based security can catch users browsing to GenAI apps, but not services making direct API calls.

Code scanners can find hardcoded API keys, but not containerized services that download models at startup.

Even cloud security platforms that do runtime monitoring see network traffic, but they cannot decode AI-specific protocols or give you semantic visibility into what AI services are actually doing.

The critical gap is not just visibility. It is AI-native visibility.

You might see:

outbound HTTPS traffic to api.anthropic.com

But what you actually need to see is:

Service X sent customer PII in a Claude API call using MCP

You might catch anomalous data exfiltration, but not understand that it is an agent chain using A2A calls to orchestrate a workflow.
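To make that contrast concrete, here is a minimal Python sketch of the two views of the same request. Everything in it is illustrative: the `FlowRecord` and `AIEvent` types, the `PROVIDER_HOSTS` table, the placeholder model name, and the two regexes are assumptions made for this example, not AIOStack's detection logic. It also assumes the request body is already available in plaintext, and real PII detection needs far more than pattern matching.

```python
import json
import re
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical host-to-provider table; extend it for your own environment.
PROVIDER_HOSTS = {
    "api.anthropic.com": "Anthropic",
    "api.openai.com": "OpenAI",
}

# Deliberately naive patterns, for illustration only.
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


@dataclass
class FlowRecord:
    """What a network-level tool sees: which service talked to which host."""
    service: str
    host: str
    port: int


@dataclass
class AIEvent:
    """What an AI-aware view adds: provider, model, and what kind of data was sent."""
    service: str
    provider: str
    model: str
    pii_types: List[str] = field(default_factory=list)


def to_ai_event(flow: FlowRecord, body: str) -> Optional[AIEvent]:
    """Enrich a flow record with details from the decoded request body."""
    provider = PROVIDER_HOSTS.get(flow.host)
    if provider is None:
        return None  # not a known LLM endpoint
    payload = json.loads(body)
    # Search the serialized chat messages for PII-like strings.
    text = json.dumps(payload.get("messages", []))
    found = sorted(name for name, rx in PII_PATTERNS.items() if rx.search(text))
    return AIEvent(flow.service, provider, payload.get("model", "unknown"), found)


# Example: the flow record alone says "HTTPS to api.anthropic.com";
# the enriched event says which service sent which kind of data.
flow = FlowRecord("support-bot", "api.anthropic.com", 443)
body = json.dumps({
    "model": "claude-example",  # placeholder model name
    "messages": [
        {"role": "user", "content": "Refund jane@example.com, SSN 123-45-6789"}
    ],
})
event = to_ai_event(flow, body)
```

The point of the sketch is the shape of the data, not the matching rules: the flow record and the AI event describe the same request, but only the second one tells a security team something they can act on.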

AI traffic flows between services, across clusters, and through third-party APIs. It is dynamic, distributed, ephemeral, and non-deterministic. Static controls and perimeter-based thinking do not survive contact with that reality.

Here is the worst part: even when security tries to help, they become the bottleneck. Every new AI integration requires a review. Every new LLM endpoint needs approval. Every agent chain needs an audit. The process is manual and slow, the exact opposite of how AI teams work.

We cannot secure AI by slowing it down.

The breakthrough came from a simple realization:

Security for AI cannot be a gate. It has to be a mirror.

AI teams will always prioritize speed and accuracy. That is their job. We should not try to change that.

Instead, security has to work invisibly, monitoring everything in real time, without code changes, without extra latency, and without sending developers into yet another compliance workflow.

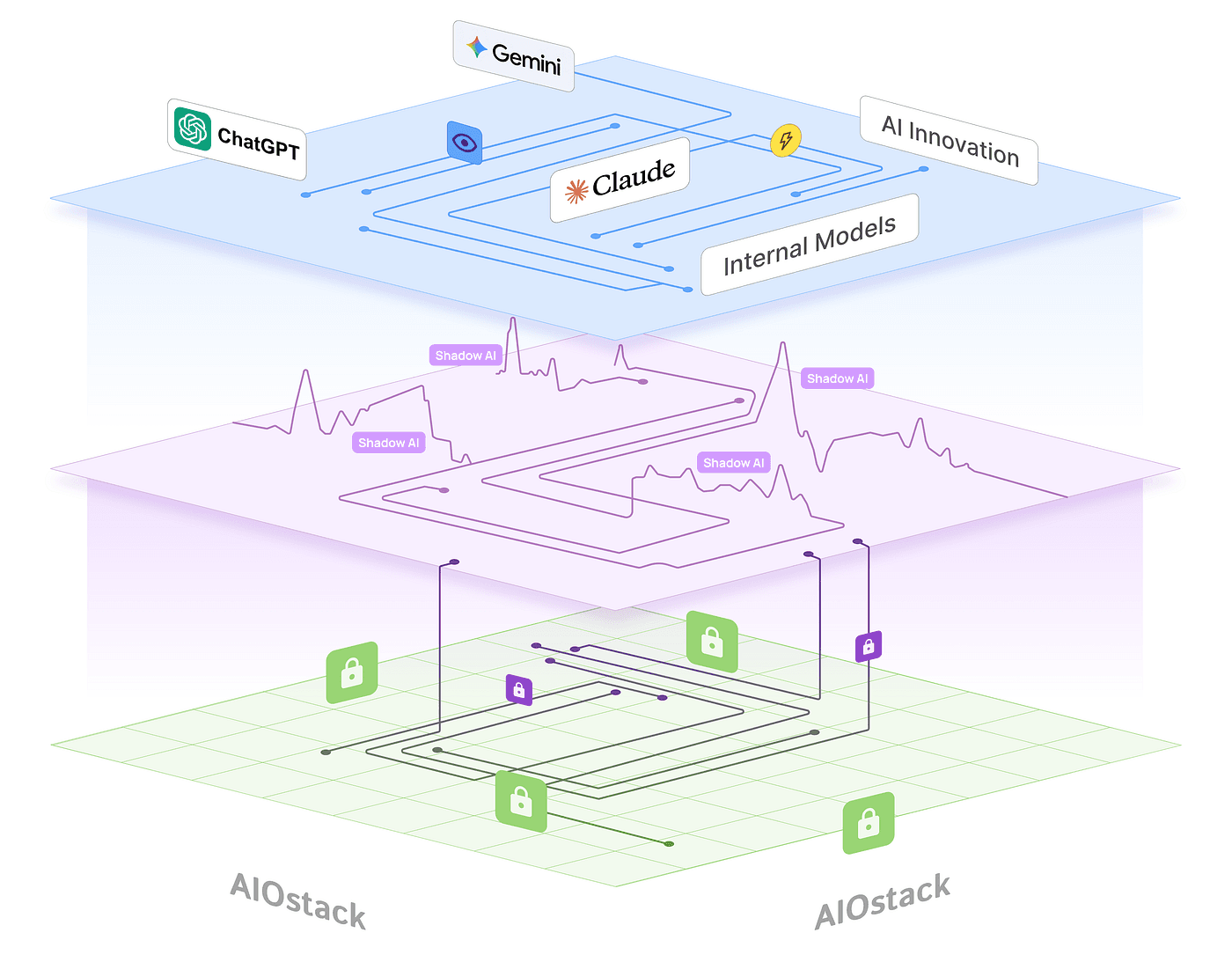

That is why we built AIOStack on eBPF.

eBPF (extended Berkeley Packet Filter) lets us observe network calls and data flows at the kernel level in your Kubernetes environment. We don’t need app-side agents or SDKs. We don’t need developers to instrument their code. We don’t need security teams to manually chase every new endpoint.

AIOStack automatically discovers every AI service running in your environment. It decodes modern AI protocols, MCP (Model Context Protocol), ACP (Agent Communication Protocol), and A2A (Agent-to-Agent communication), and gives you instant visibility into:

All of this happens continuously in the background, without blocking deployments and without adding measurable latency to your AI workloads.

No tickets. No code changes. Just a clear, live picture of what your AI stack is actually doing.
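As one illustration of what decoding an AI protocol can involve: MCP messages are JSON-RPC 2.0, so once a payload has been captured in plaintext, recognizing a tool call is largely a matter of parsing it. The sketch below is a hypothetical Python example, not AIOStack's decoder. The function name `summarize_mcp`, its output format, and the `lookup_customer` tool are invented here, and it only handles single, non-batched request messages.

```python
import json
from typing import Optional

# A few request methods defined by MCP; this sketch ignores everything else.
KNOWN_MCP_METHODS = {
    "initialize",
    "tools/list",
    "resources/list",
    "resources/read",
    "prompts/list",
    "prompts/get",
}


def summarize_mcp(raw: str) -> Optional[str]:
    """Return a one-line summary if raw looks like an MCP request, else None."""
    try:
        msg = json.loads(raw)
    except json.JSONDecodeError:
        return None
    # MCP is built on JSON-RPC 2.0: requests carry "jsonrpc" and "method".
    if not isinstance(msg, dict) or msg.get("jsonrpc") != "2.0":
        return None
    method = msg.get("method")
    if not isinstance(method, str):
        return None
    params = msg.get("params")
    if not isinstance(params, dict):
        params = {}
    if method == "tools/call":
        tool = params.get("name", "?")
        arguments = params.get("arguments") or {}
        arg_names = ", ".join(sorted(arguments))
        return f"MCP tool call: {tool} (arguments: {arg_names})"
    if method in KNOWN_MCP_METHODS:
        return f"MCP request: {method}"
    return None  # JSON-RPC, but not a method this sketch recognizes


# Example payload, as it might appear once captured and decoded.
captured = json.dumps({
    "jsonrpc": "2.0",
    "id": 7,
    "method": "tools/call",
    "params": {
        "name": "lookup_customer",  # hypothetical tool name
        "arguments": {"email": "jane@example.com"},
    },
})
summary = summarize_mcp(captured)
```

Note that the summary reports argument names rather than values, a design choice that keeps the monitoring output itself from becoming another place where customer data leaks.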

Every AI-native startup is being asked to do two hard things at once:

The companies that figure out both will win. The ones that do not will either:

We refuse to accept that trade-off.

That is why we are launching AIOStack Community Edition, a free distribution built on an open core model. We want AI-native companies to grow fast, scale confidently, and walk into enterprise security conversations with receipts, before they can afford a six-figure security platform. And we want enterprises to stop spending months in POCs or millions of dollars just to unblock their AI adoption.

The community edition gives you:

No sales calls. No procurement. No six-month pilot.

Just deploy AIOStack to your cluster and start seeing what is actually happening in your AI stack.

We are not bolting an “AI module” onto a legacy CSPM product. We are not sprinkling LLM scanning on top of an old security platform.

We are building a security layer designed from the ground up for the AI-native era.

Because AI security is not just “security, but for AI”:

AI security needs to be autonomous, adaptive, and invisible. It needs to match the speed of AI development. It needs to give security teams deep visibility without turning them into the bottleneck. And it needs to let AI teams do what they do best, build incredible products, without compromising on safety.

That is what AIOStack is. That is why we built it. And that is why we are making Aurva AIOStack freely available through the Community Edition.

Get started with AIOStack Community Edition → https://github.com/aurva-io/AIOstack

Read more → https://aurva.ai/

Join our Slack community → https://join.slack.com/t/aiostack-aurva/shared_invite/zt-3idcrvhdx-3ExhxVeMTGjYsqPgKPDd_Q

Read the technical deep dive → (coming soon)

The future is AI-native. The future of security needs to be too.

—

Apurv

Founder, Aurva

Forever an AI Engineer

USA

AURVA INC. 1241 Cortez Drive, Sunnyvale, CA, USA - 94086

India

Aurva, 4th Floor, 2316, 16th Cross, 27th Main Road, HSR Layout, Bengaluru – 560102, Karnataka, India

PLATFORM

Access Monitoring

AI Security

AI Observability

Solutions

Integrations
